Maximilian Du

"And still 24 hours, maybe 60 good years, it's really not that long a stay..." -JB

Hey there! I am a second-year Ph.D. student at Stanford University working with Shuran Song in the Robotics and Embodied AI (REAL) Lab. I'm broadly interested in how robots can learn and reason like humans and animals. I am grateful to be supported by the Knight-Hennessy Fellowship and the NSF Graduate Research Fellowship.

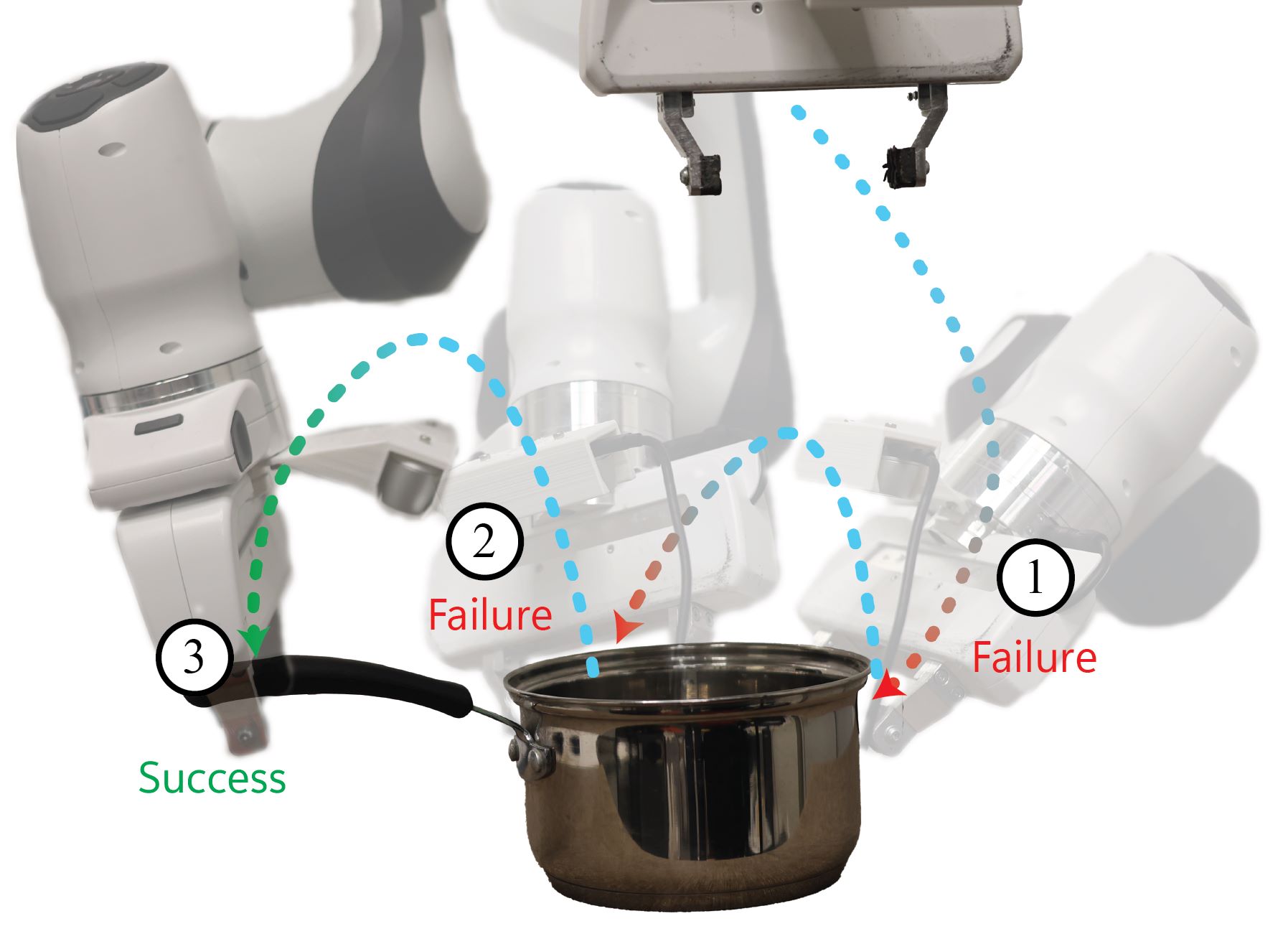

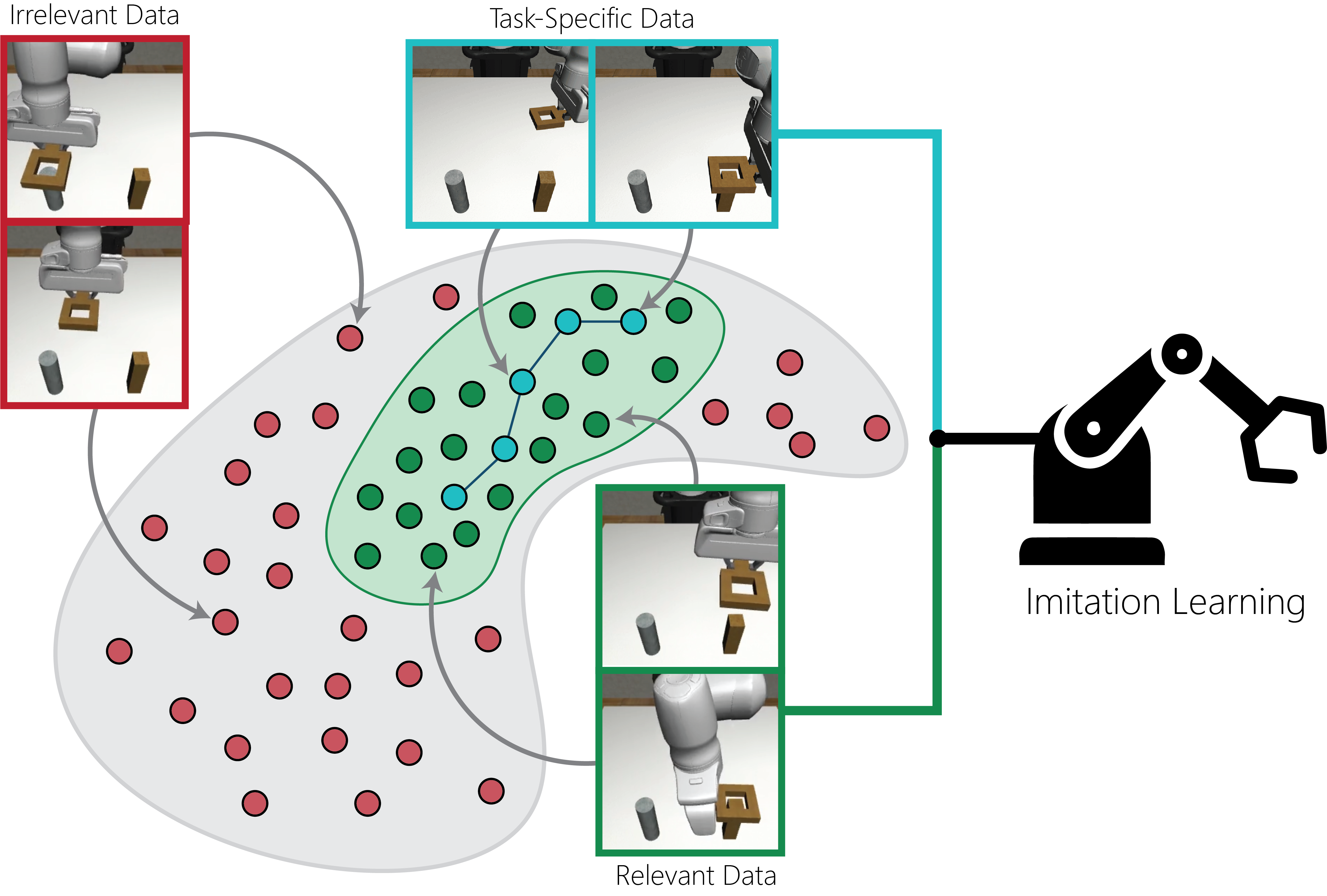

I completed my undergraduate degree in computer science at Stanford, where I conducted research on imitation learning methods in Chelsea Finn's IRIS lab. In the months before my Ph.D., I interned at the Navy Marine Mammal Program, where I worked hands-on with dolphins and sea lions. This once-in-a-lifetime experience convinced me that a better understanding of animal cognition will be critical to crafting better AI algorithms.

Beyond the robot lab and my continued involvement with the Navy dolphins, I'm a writer and an oral historian. Sometimes this work leads me to advocate for animal care professionals, their animals, and responsible zoological facilities. But most of the time, I enjoy writing short stories. I hope you can read them soon.