PROOF TRICKS

| Tags | CS161 |

|---|

Randomized Algorithms

Try representing success as a geometric random variable with some probability . The expected runtime is therefore

Remember: you want , not

Decision Trees

Remember the tips on constructing a decision tree. Also, Stirling’s approximation can be your friend here

Trees

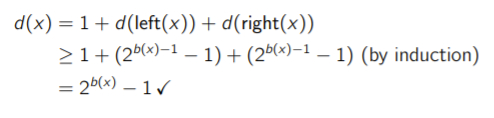

for a red-black tree, try keeping track of , which is the black height, and , which is the number of descendants at node . When you’re trying to prove the upper bound of height, you might use the inductive hypothesis that , which yields the correct inductive step

You finish the height proof by taking the log of both sides and using the fact taht , because you can’t have two red nodes in a row.

Hashing

You want to show that

where is randomly selected. You can imagine as a random variable of parameters, and as being . So, essentially given the worst possible adversarial example, the chance of collision is still essentially random. It doesn’t mean that there is no collision; in fact, certain selections of WILL create a collision.

Graph algorithms, generally

Runtime analysis

In general, search algorithms take if they touch the vertices and the edges. In a connected graph, we have . We add the to account for cases where you have more vertices than edges, which can happen. Intuitively, you “touch” a vertex on your way to another vertex. If you have to start at a new vertex, you don’t traverse an edge but you should still count this starting as contributing to the complexity.

Induction

Induction is a popular choice for proving things. You might build things up, which is a common way of doing things.

Cycles, trees, etc

- Odd cycles can’t be colored with two colors

- Trees are fully connected but have no cycles

- bipartite graphs are connected across the sides but have no edges that go between same-side nodes

Timing

Remember that if a node finishes after another node in the same connected component, then that second node must be a descendant of the first node.

Contradiction

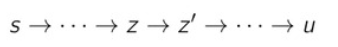

In djikstra’s algorithm, we showed that when a vertex is marked done, . We show this by contradiction. We propose that is the last vertex such that . More specifically, let’s consider the shortest path.

-

-

- (the main claim of contradiction)

- therefore,

- Therefore, if we picked , then it must be the case that is marked as done.

- If is done, then it would have updated its neighbor such that

- We are in the shortest path already, so . As such, has the equality, and it contradicts our original assumption.

Dynamic Programming

The proof is typically the root of your work, because you want to essentially create an inductive definition. So proving it is often trivial.

Here are some DP tricks

- If you are given limited resources, you should consider creating a subproblem that has even more limited resources. For example, you might have a knapsack with a lower limit, etc

- Try to find good moves now. This typically involves using a operator. This is not a greedy algorithm, because it often requires you to try many things out

- Think carefully about the base case. If you are doing something like , if you provide a nonsensical base case, the whole thing will collapse

Greedy Algorithms

The proof is typically the hardest part. I like to think of it as a two-fence system, where you want to show that you can swap out the current optimal choice with the greedy choice and it still makes sense

Start with the greedy and optimal agreeing up to this time step

- First fence is some kind of bound on what the optimal algorithm can choose, because the greedy algorithm typically maximizes something

- Second fence is some kind of bound on optimality

If you did it correctly, you will end up with some sort of “soft” area in which you can switch things around until you get to the greedy choice

Example: picking the most events in a time block. The greedy algorithm picks the next viable activity with the smallest finish time. Therefore, the optimal algorithm can’t pick an activity with a smaller finish time. By definition, we can just swap out the greedy algorithm’s choice, as it doesn’t overlap (it just makes a larger gap). In this example, the first fence is that the greedy algorithm’s choice fits inside the optimal algorithm’s choice. The second fence is that we make an equivalent swap so optimality is kept.

Graph cuts

To prove facts about MST, use these facts

- Trees are connected

- An arbitrary cut in a graph that has an MST will cut one edge of the MST.

- You can switch out any edge that spans the cut for this one MST edge, which means that the MST edge must be the light edge

To prove facts about flow, use these facts

- Maximum flow is equivalent to minimum cut