Multiplication

| Tags | AlgorithmsCS161 |

|---|

Grade school multiplication

In grade school multiplication, we multiply together numbers (think about it). Can we do better?

Divide and conquer: a start

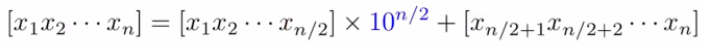

For some number of magnitude , given that is even, we can split it up like this:

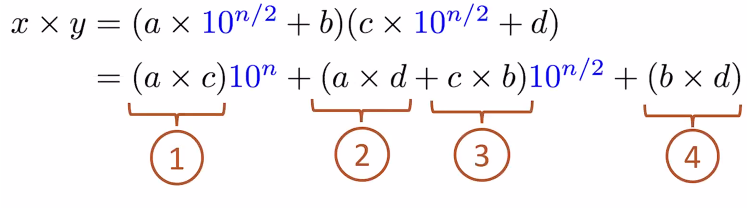

And this means that multiplication becomes

The key insight is that a one n-digit multiplication can be written in terms of 4 digit multiplications!

Recursing

You can think of a very simple recursive algorithm that uses this insight. The base case is when , in which you compute a one-digit product.

We assume that is a power of 2 to help us. If is not a power of 2, we need to augment the splitting somehow. Here’s the insight: instead of worrying about if is even or not, we focus on if the two numbers are of the same size. If they are not the same size (which will happen when is odd and you split), you pad the numbers with leading zeros until they are of the same size. Now, this size could be even or odd. If they are even, then the next cycle will get a clean split. If they are odd, the next cycle might get a bad split but more padding will fix it.

Why does this work? Well, leading zeros do not change the properties of the numbers. Some of the base cases will involve multiplying numbers by zero, which does waste computational resources but it makes the problem feasible.

Does this help us?

Well, here’s some facts. Every step, we reduce the problem by half. We also increase the number of problems by 4. Therefore, at the end we reduce by times, but we increase the number of problems by times, which is equivalent to . Hmmm.

It actually appears that we’ve done all this work for nothing! But we haven’t made zero progress. The key insight is that we are only stuck because the branch factor is 4. Can we reduce this number?

Karatsuba algorithm

Here’s the key insight. We need to recurse on 3 problems instead of 4. To do this, we compute (using our previous terminology) . The general approahc is to break it up into 9 n/3 sized problems, and then find 5 distinctive products of n/3 such that you can generate the 9 n/3 products through subtraction and addition. It’s a fun little puzzle.

This is because , and we need . Therefore, we can just compute to get this term. And are used in the other two parts of the expansion. So we do indeed only compute three multiplication operations!

And this gets us , which is equivalent to , which is around . This is a lower order!

Other approaches

Toom-Cook proposed an algorithm that breaks something into five n/3-sized problems, which yields a complexity of .

Schonhate-Strassen in 1971 proposed an algorithm that runs in time.

Furer in 2007 propsoed an algorithm that runs in

Harvey and van der Hoeven in 2019 proposed an algorithm that runs in time.