Decision Trees

| Tags | CS161Complexity |

|---|

The big idea

We can use decision trees to show upper bounds on sorting algorithms.

Decision tree

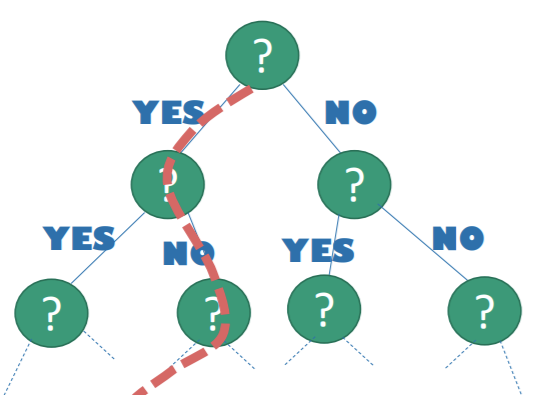

In comparison based sorting, we are allowed to compare two elements at a time and understand if one is larger or smaller than the other. You can think of this as a decision tree in which each node is a question, and each answer gets you to another question until you reach the end.

Minimal complexity

Now here’s the crux: at the end, the algorithm spits out a sorted array. If the array of size , then there must be sorted array at the leaves of the tree. If we started with one question (which we do) and if the tree is binary & balanced, then the longest path must be

From Stirling’s approximation or the neat trick with log factorials, we get hat .

So if the general comparison based sorting algorithm is lower bounded with in the worst case, then we can assert that all such algorithms must run in at least . Cool!

Randomized algorithms

You can actually prove that the expected runtime of randomized algorithms like Quicksort also have the same lower bound. However, we will not do it here (a little beyond the scope of our discussion)

Understanding the tree

One Philosophy

Perhaps this is the most unintuitive part of the whole thing. How do you even begin thinking of a problem in terms of a decision tree? Well, it always helps to think of the number of possible inputs and the number of possible outputs.

Here’s the general rule of thumb: the number of leaf nodes is the input size divided by the number of inputs that can reach one output.

It’s best to think of a leaf node as containing the “answer” to something. Let’s look at a few examples

- Sorting a list: the leaf node contains a list of indices that map an input to sorted output

- Select top-K in a list: the leaf node contains a list of indices that are the top-k in the list

- Merging sorted list: the leaf node contains a list of insertion orders

- -sorting (in which the sorted number may be within a certain radius of the actual location): the leaf node contains a list of indices that map to an approximately sorted output

Now, let’s think about the number of inputs that can reach one output. Practically speaking, this is the number of inputs for which, after you apply the leaf node’s list of indices, yield a correct answer.

- Sorting a list: there can only be one ordering of numbers that work with the root node’s sorting plan. So, there are leaf nodes

- Select top-K in a list, sorted. If you specify the indexes of top-k, the other numbers can be permuted any way you want. So there are leaf nodes

- Select top-K in a list, unsorted. If you specify the indexes of top-K, firstly, there are different permutations of top-k elements that yield the correct answer. Second, there are different permutations of non-top-k elements that don’t matter. So, you have leaf nodes

- Merging sorted lists of size . Here, you must work backwards. If you give an order of insertion, only one pair of lists will satisfy (assuming distinct numbers). But how large is the input? Well, you can imagine splitting apart a sorted sequence of size . You can do this in different ways.

- sorting: there are inputs, but you can show that there are different valid sort outputs. You can imagine turning this relationship around and saying that there are different inputs that reach one output. Therefore, the number of leaf nodes is

From the number of leaf nodes, we just take the logarithm to find the depth of the tree

At the end, you will get an expression that should be placed in , as it is the lower bound.

Another philosophy

Just look at the number of outputs an algorithm can make

- In sorting, it’s just

- In select top-K, sorted, it’s just , because you pick the top k, and then you sort

- In select top-K unsorted, it’s just

- In merging sorted lists, the algorithm picks which ones to merge at what time, so it’s just

- In sorting, there are possible correct outputs, so you can imagine the best algorithm as taking advantage of the multiple paths and pushing different inputs down one solution path. Therefore, there are leaf nodes.