Complexity

| Tags | CS161Complexity |

|---|

Some confusion

When you want to prove that something is , we want to say that it holds in the worst case. When you want to disapprove that something is , you just need to find one example where it doesn’t hold.

Remember your first order logic. If the statement is “there exists a tree such that , the disapproval of that statement is “there exists no tree such that . It is NOT “there exists a tree such that . This is quite critical!!

Worst case analysis

This general paradigm is as such: we have an adversary who wants to find the worst possible input for our algorithm. With input sizes of , we denote the worst possible time as . So for example, in a sorting algorithm a typical worst case would be an inverse sort where everything is sorted but backwards.

We will use in asymptotic analysis, which is detailed below.

Asymptotic analysis

The big idea is that we look at how behaves for larger and larger , which allows us to disregard everything except for the largest term.

The big pro is that it’s simple and can allow us to compare algorithms, but the con is that it may not represent what we want. For example, 10000000n is “better” than

Big O

We seek to answer an important question about our algorithm: how does it perform in the worst possible case?

This is where the big notation comes in.

The informal definition

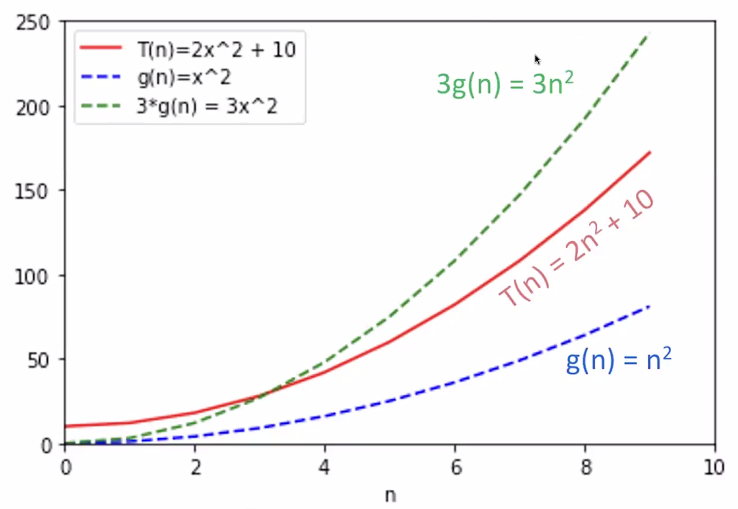

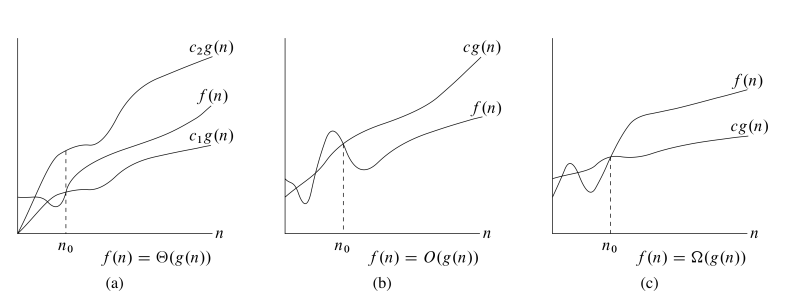

If is the runtime and is some simple function, then we say that if some constant scaling of outruns past a certain point.

So for example, we say that in the case above would be

Formal definition

Actually, we have much of the framework down already. if

“There exists some such that for all larger than some constant , the runtime is less than ”

You can chose other values of and . Generally speaking, the larger the , the smaller the

Big Omega

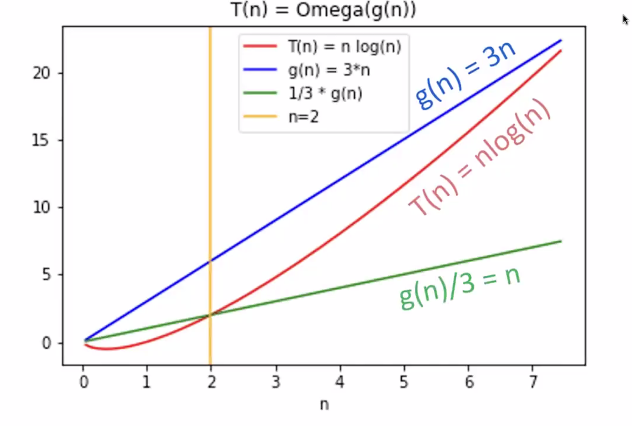

While we started with big O notation as the upper bound, we can also talk about a lower bound such that will outrun past a certain point.

For example, is , which is shown graphically below

Formalism

It’s very similar. is if

It should be obvious, but if , then .

Big Theta

We say that is if is and .

Now, this doesn’t mean that !!! In fact, . This is because at lower constants , at sufficiently high , while at larger constants, it’s the other way around. Mathematically, it’s because the intersection is a quadratic, and depending on what the is, the roots behave differently.

But the general upshot is that only the largest coefficients seem to matter. More on this to come

Solving for

The easiest way is to set of an inequality, like , and then you do some algebra. For example, you might get . Then, you assign some arbitrary , and then find a value of that satisfies this expression. The key insight is that, algebraically, there are infinitely many solutions so you must assign something arbitrarily and solve for the other one

Tightness of asymptotic relationships

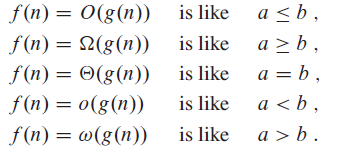

We call a bound asymptotically tight if it’s possible to “touch” the bound. For example, is asymptotically tight. We define the little notation to represent bounds that are not asymptotically tight, like . More formally:

In other words, no matter how flat or steep we transform this bound function , it is possible to find a point where does not “touch” the bound again. This is typically a stricter restiction, and as , and become infinitely far apart (which is not guarenteed in asympotitcally tight bounds)

There exists a similar system for notation, where it’s the same as above but just flipped.

Asymptotics in equations and inequalities

Let’s just take a moment to put our hand down on the conventions, because this thing can be confusing. For example, we said something like . But what does this even mean? Well, this can be thought of as a set, and this equals sign is a membership: .

However, in an equation, it’s more of a shorthand anonymous function. For example, , where we collapsed down the term and all its lessers. We can also understand it as , where .

For equations like , we say that there is some way of making two functions of order n and n^2 such that this equation is satisfied.

A great diagram

And a good intuition

Proof techniques

The simple

To prove that something is of a certain or , it’s an existence proof. Just find the and the . And this is what a formal proof should look like. Instead of saying something like “the log grows slower than the ,” find these two values and it will be true.

To prove that something is not a certain , we can use a proof by contradiction. Start by assuming that there exists some and then show that it’s impossible

Important note

Because this is an existence proof, you need to specify values of directly. Then, you can move around the until you get a bound for , and this should check out with what you defined to be. You shouldn’t start by solving for and then determining what should be. This step can be done first, but then you erase these steps and write a new proof that assumes you have the information at the beginning.

The abstract

Here’s the key that may be confusing. You don’t need to solve for actual values! This is expecially relevant if one of your functions had an anonymous function involved, like .

How would you go about proving that ? Well, we use the facts

- (definition)

- (moving into equation)

-

- And let’s use

- , which is what we wanted to show

Do you see how we used this single inequality and propagated it through?

Logical fallacy

When we go to prove complexity, we often require induction. You must pick the constants BEFORE you do the induction. In other words, this is incorrect as the inductive hypothesis: (if we’re proving )

For every , there exists a such that

This is because you can easily define a for every !! Just make it as large as you want at each time step. The key point is that you should be picking FIRST. This is what a real inductive hypothesis looks like

There exists a such that for every , .

Subtle difference but very important!

Side note on induction

Induction proofs can go wrong in a few ways. The classical (and most tricky) way that it gets wrong is during the induction step, it uses an inductive hypothesis that we haven’t assumed yet.

The inductive hypothesis can be wrong WRT the problem, but that’s not a problem of the induction itself. For example, we might use our first example (logical fallacy) and prove something by induction. That proof would be flawless, but the end upshot wouldn’t be.